CS 6610 Spring 2015 - Final Project

Description

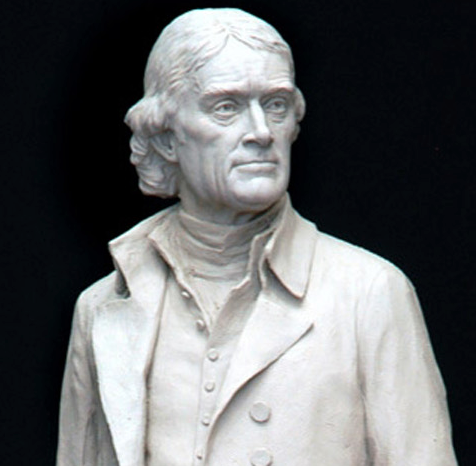

I chose to implement Scalable Ambient Obscurance [MML12] for my project, a fast screen-space ambient occlusion method. It improves on the performance and quality of an earlier method by McGuire et al., the Alchemy Ambient Obscurance algorithm [MOBH11]. Ambient occlusion methods attempt to approximate the way less ambient/environment light reaches the inside of crevices on an object. The effect being implemented resembles the illumination of an object on a cloudy day, like the statue below.

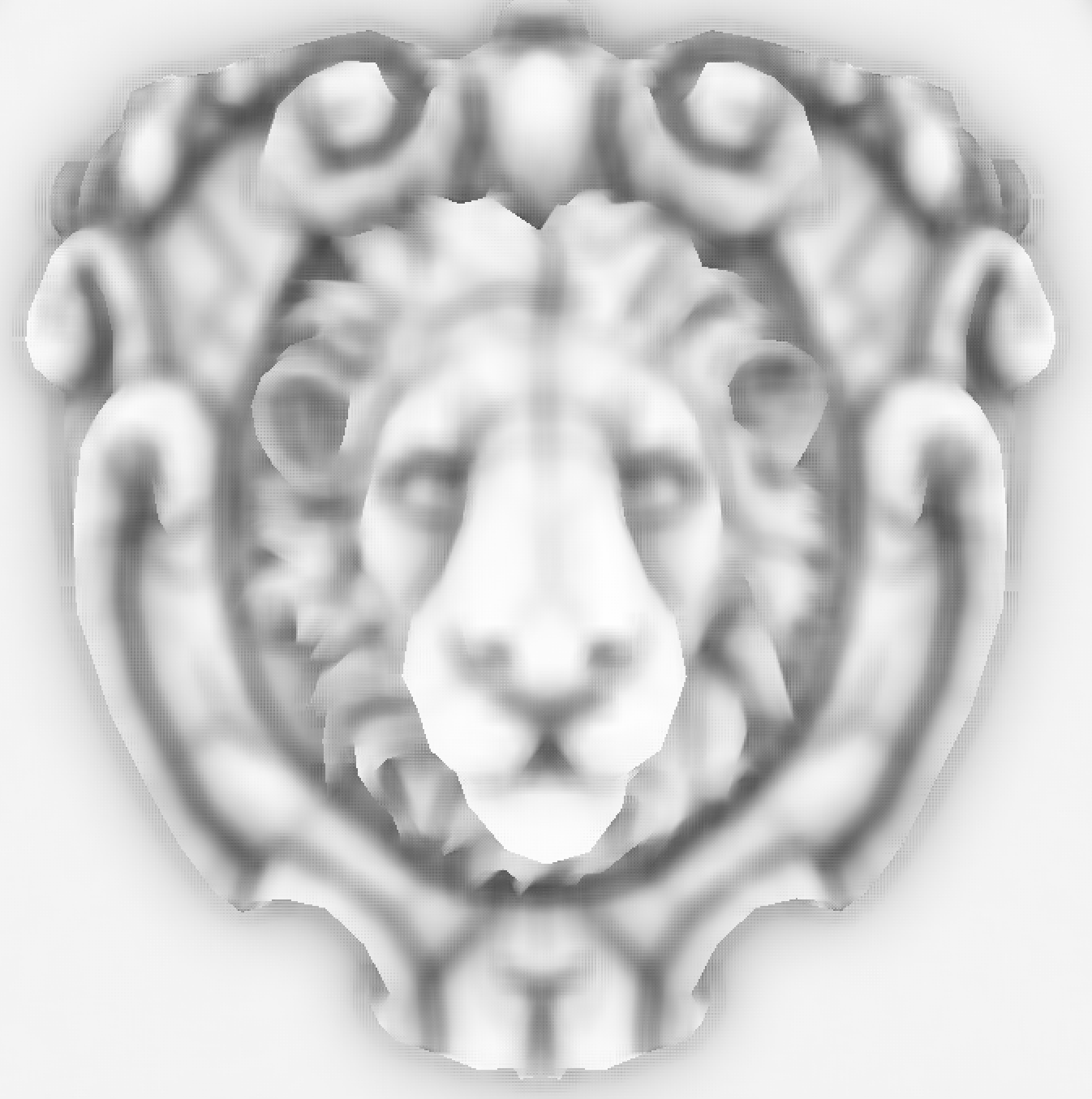

The areas we're interested in here are the shadows beneath the collar, in the creases of the neck, where the jacket meets the shirt, and in the facial features. For comparison, the lion head from the Crytek Sponza scene is shown, displaying the value of the ambient obscurance light modulation term. Note especially the darkening within the crevices on the model, which gives a similar effect to that in the photo of the statue.

Slides of [MML12, MOBH11]

Geometric Construction

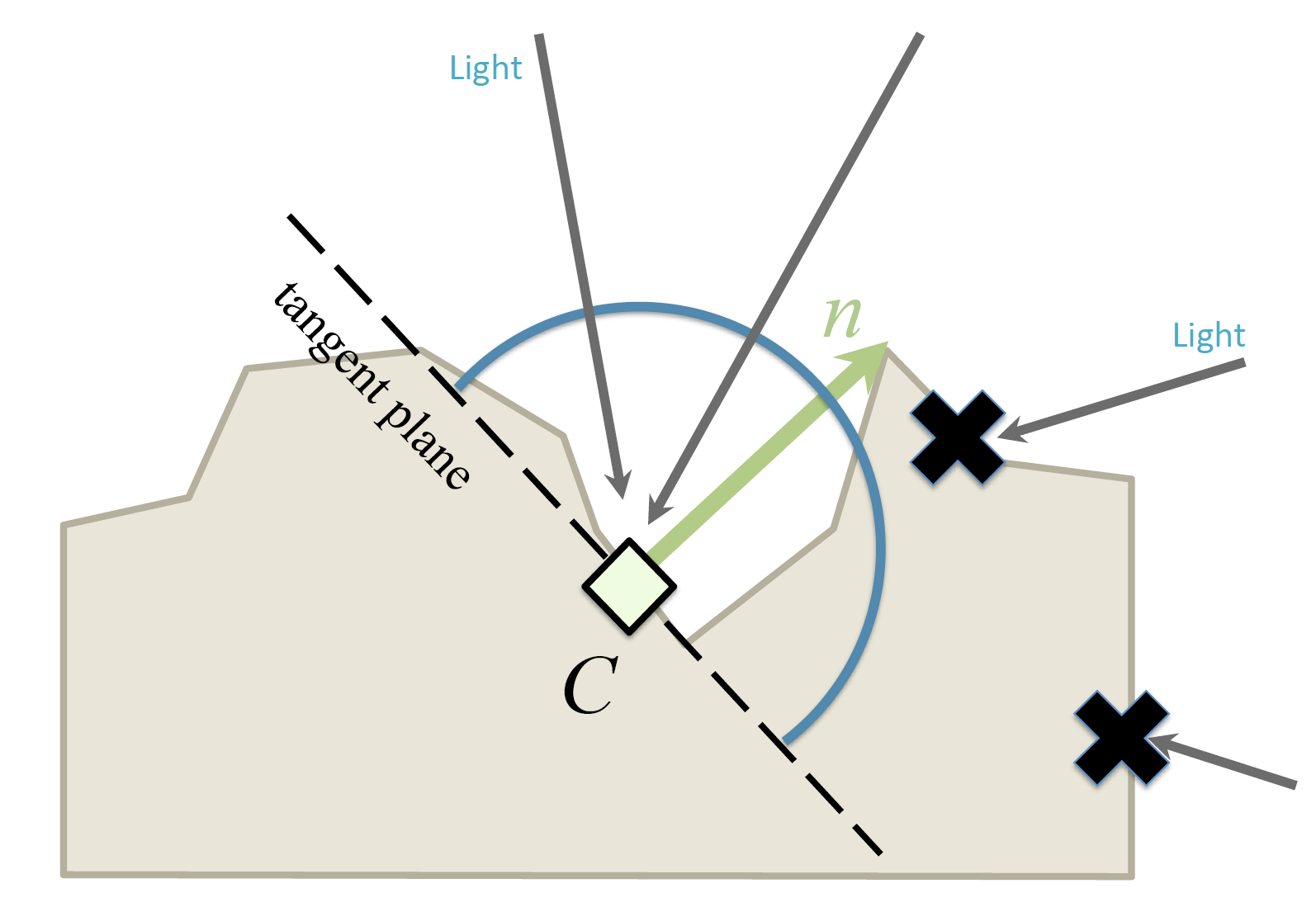

The geometric construction of the effect follows from an approximation of how ambient occlusion works. A hemisphere is placed at the point, aligned with the surface normal, and we determine how much of this hemisphere contains objects that could block environment light incident on the point. Because of this approximation, the accuracy of the method depends heavily on the hemisphere capturing the local effects properly.

[MML12, MOBH11]

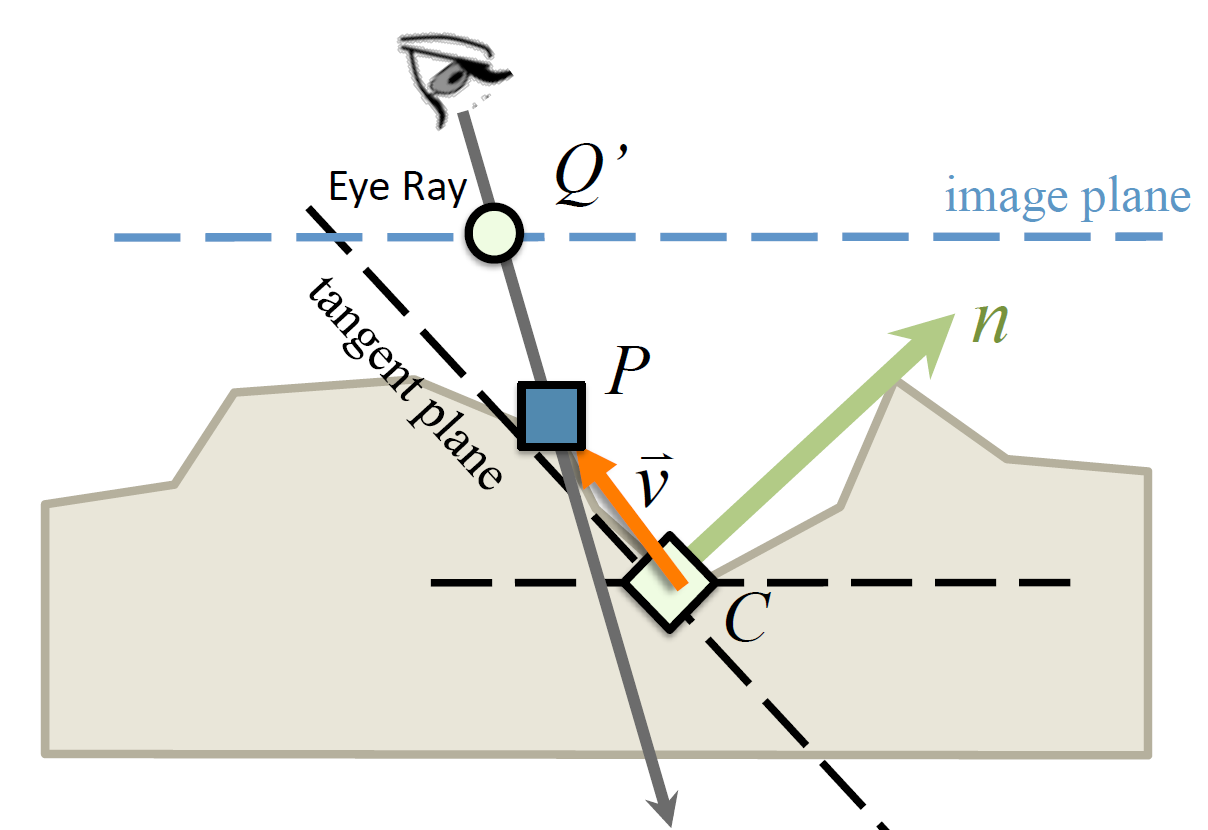

The Scalable AO (and Alchemy AO) methods choose some number of points around the hemisphere and, for each one, compute a vector to it from the point \(C\) whose AO value is being computed. This vector gives us the distance to the sample point \(P\) and the difference in depth between the two points, i.e. whether \(P\) would block ambient light from reaching \(C\), with the distance indicating how much it affects \(C\). For performance reasons this computation is done in screen space, using information from the scene's depth buffer to reconstruct camera space positions: we sample some pixel to find \(C\) and then sample on a circle of pixels around it to find neighboring points \(P_i\).
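As a rough illustration of the screen-space step, the sketch below recovers a camera space position from a pixel and its depth, and picks pixel offsets on a circle around a center pixel. It is not the paper's exact formulation: it assumes a symmetric perspective projection, an OpenGL-style camera looking down \(-z\), pixel origin at the bottom left, and a depth value that is already linear. The function names and parameters are hypothetical.

```python
import math

def reconstruct_camera_space(px, py, depth, width, height, fov_y_deg):
    """Recover a camera space position from a pixel and its linear depth.

    Assumption: symmetric perspective projection, camera looking down -Z,
    `depth` is the positive distance along the view axis.
    """
    # Pixel center -> normalized device coordinates in [-1, 1].
    ndc_x = (px + 0.5) / width * 2.0 - 1.0
    ndc_y = (py + 0.5) / height * 2.0 - 1.0
    tan_half_fov = math.tan(math.radians(fov_y_deg) / 2.0)
    aspect = width / height
    # Scale the view ray by depth to land on the visible surface.
    x = ndc_x * aspect * tan_half_fov * depth
    y = ndc_y * tan_half_fov * depth
    return (x, y, -depth)

def circle_offsets(num_samples, radius_px):
    """Integer pixel offsets evenly spaced on a circle of radius `radius_px`."""
    offsets = []
    for i in range(num_samples):
        angle = 2.0 * math.pi * i / num_samples
        offsets.append((round(radius_px * math.cos(angle)),
                        round(radius_px * math.sin(angle))))
    return offsets
```

Adding each offset to the center pixel and reconstructing the position at the resulting pixel gives the neighboring points \(P_i\) for that \(C\).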

[MML12, MOBH11]

The ambient occlusion at a point \(C\) is computed by taking \(s\) samples distributed on a hemisphere around the point and recovering their camera space positions \(P_i\). We then find \(v_i = P_i - C\) and sum the occlusion contributions of each sample. On a simplified level, the dot product \(v_i \cdot \hat{n_C}\) tells us how far in front of the surface \(P_i\) is. A small amount of bias, \(\beta\), is also applied to prevent self-shadowing, similar to the bias used in shadow mapping. This contribution is then divided by the squared length of \(v_i\) to reduce the shadowing contribution of points further away from the point being computed. The \(\varepsilon\) term is a small value used to prevent division by zero. See [MOBH11] for full details of the derivation of the ambient occlusion estimator used, shown below.
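The description above, together with the estimator that follows, can be sketched on the CPU in a few lines of Python. This is only an illustration, not the shader used in the project: the default parameter values are placeholders, and \(z_C\) is taken to be the camera space \(z\) of \(C\), which is negative in front of an OpenGL-style camera so that the bias term reduces each contribution (this sign convention is an assumption).

```python
def alchemy_ao(c, n_c, samples, sigma=1.0, beta=1e-4, epsilon=1e-2, kappa=1.0):
    """Evaluate A(C) for camera space point `c` with unit normal `n_c`.

    `samples` holds the camera space positions P_i. The default values of
    sigma, beta, epsilon and kappa are placeholders, not tuned settings.
    """
    if not samples:
        return 1.0  # no samples: treat the point as unoccluded
    total = 0.0
    for p in samples:
        v = tuple(pi - ci for pi, ci in zip(p, c))      # v_i = P_i - C
        v_dot_n = sum(vi * ni for vi, ni in zip(v, n_c))
        v_dot_v = sum(vi * vi for vi in v)
        # Bias with z_C * beta, clamp at zero, then apply distance falloff.
        total += max(0.0, v_dot_n + c[2] * beta) / (v_dot_v + epsilon)
    return max(0.0, 1.0 - 2.0 * sigma / len(samples) * total) ** kappa

# A point on a floor facing +Z, with one sample well in front of the surface.
visibility = alchemy_ao((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), [(0.0, 0.0, 0.0)])
```

Samples lying in the tangent plane of \(C\) contribute nothing, so a flat surface stays fully lit, while samples in front of the surface darken the result.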

$$ A(C) = \text{max}\left(0, 1 - \frac{2 \sigma}{s} \sum_{i = 1}^{s} \frac{\text{max}(0, \vec{v_i} \cdot \hat{n_C} + z_C \beta)} {\vec{v_i} \cdot \vec{v_i} + \varepsilon}\right)^\kappa $$

Implementation Details

My project takes advantage of a few recent OpenGL features: MultiDrawIndirect, shader storage buffer objects (SSBOs) and texture arrays. With these features it becomes possible to draw the entire Sponza scene below using a single call to MultiDrawIndirect. This is achieved by packing all textures used by the objects in the scene into as few texture arrays as possible and storing each material's properties, along with the array and layer index of its textures, in an SSBO. Additionally, all model data for objects in the scene is packed into a single buffer containing the vertex and index data. The end result is that we can pass a per-instance parameter specifying which material to use, and each object can look up its material properties and texture data through the SSBO and texture arrays, allowing us to draw all objects in the scene with a single draw call.
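To make the packing concrete, here is a small Python sketch of how per-mesh draw commands could be built for a shared vertex/index buffer. Each command has the five 32-bit fields of OpenGL's `DrawElementsIndirectCommand` (count, instanceCount, firstIndex, baseVertex, baseInstance). The `Mesh` type and the use of `baseInstance` to carry the material index are assumptions for illustration and not necessarily how my renderer passes the per-instance parameter.

```python
import struct
from dataclasses import dataclass

@dataclass
class Mesh:
    vertices: list    # one entry per vertex (contents do not matter here)
    indices: list     # indices local to this mesh
    material_id: int  # index into the material array stored in the SSBO

def build_indirect_commands(meshes):
    """Pack meshes into shared buffers and emit one draw command per mesh."""
    all_vertices, all_indices, commands = [], [], []
    for mesh in meshes:
        commands.append((
            len(mesh.indices),   # count: number of indices to draw
            1,                   # instanceCount
            len(all_indices),    # firstIndex: offset into the shared index buffer
            len(all_vertices),   # baseVertex: added to each index of this mesh
            mesh.material_id,    # baseInstance: assumed to carry the material index
        ))
        all_vertices.extend(mesh.vertices)
        all_indices.extend(mesh.indices)
    # Five 32-bit integers per command, tightly packed (20 bytes each).
    packed = b"".join(struct.pack("<IIIiI", *cmd) for cmd in commands)
    return all_vertices, all_indices, commands, packed

quad = Mesh(vertices=[0] * 4, indices=[0, 1, 2, 2, 3, 0], material_id=3)
tri = Mesh(vertices=[0] * 3, indices=[0, 1, 2], material_id=7)
vertices, indices, commands, packed = build_indirect_commands([quad, tri])
```

The packed bytes would be uploaded to the indirect draw buffer, and the whole list is then submitted with one multi-draw call.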

The Crytek Sponza model itself is not very complex by modern standards, but its texture work makes it look very nice and uses some of the techniques we learned about in class. The images rendered include bump mapping, alpha mapping and specular mapping effects along with a typical diffuse texture map.

My scalable AO implementation is not quite Scalable AO as described in the paper: I've chosen to be a bit lazy and, instead of reconstructing camera space positions from the depth buffer, I render the positions out to an RGB32F texture. This lets me avoid the various precision issues discussed in the paper, but it also costs a fair amount of performance due to the increased bandwidth usage. I was also curious what AO would look like when computed with the bump mapped normals and provide an option to toggle this, but I didn't find the results very nice. McGuire et al. also recommend a new AO estimator over the Alchemy AO estimator used originally, but I couldn't get this new estimator to behave very well. It is supposed to smooth the transition into shadow and is discussed at the end of [MML12] and in their slides from HPG.
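The bandwidth difference is easy to estimate. The short Python calculation below compares the size of an RGB32F position buffer with a single-channel 32F depth buffer. The 1920x1080 resolution is an assumed example, and the figures ignore any padding, compression or caching the hardware might apply.

```python
def buffer_bytes(width, height, channels, bytes_per_channel=4):
    """Size of a tightly packed floating point buffer in bytes."""
    return width * height * channels * bytes_per_channel

WIDTH, HEIGHT = 1920, 1080  # assumed resolution, for illustration only

position_bytes = buffer_bytes(WIDTH, HEIGHT, channels=3)  # RGB32F positions
depth_bytes = buffer_bytes(WIDTH, HEIGHT, channels=1)     # 32F depth
ratio = position_bytes / depth_bytes

print(f"RGB32F positions: {position_bytes / 2**20:.1f} MiB")
print(f"32F depth:        {depth_bytes / 2**20:.1f} MiB")
print(f"ratio:            {ratio:.1f}x")
```

Every AO sample fetches one texel, so reading positions moves three times as many bytes per sample as reading depth would.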

Results

To make it easier to compare against the original paper, I rendered the Crytek Sponza scene used by McGuire et al. to demonstrate their results in [MML12]. My implementation is not as fast as the paper's, since I've left some parameters tunable and am passing the camera space positions directly in an RGB32F buffer, which consumes much more bandwidth than reconstructing positions from a 32F depth buffer. There are also areas where the quality of my implementation could be improved: the blur and smoothness of the ambient occlusion look much better in [MML12]'s implementation. The ambient occlusion effect can be a bit subtle in some areas, so the best way to see the difference is to open the full and no AO images in separate tabs and flip between them.

References

[MML12] McGuire, M., Mara, M., and Luebke, D.: Scalable Ambient Obscurance. In High Performance Graphics 2012.

[MOBH11] McGuire, M., Osman, B., Bukowski, M., and Hennessy, P.: The Alchemy Screen-Space Ambient Obscurance Algorithm. In High Performance Graphics 2011.